In front of the 4 JDKs I’ve put an haproxy instance to load balance requests. Why did I do this? All JVMs were put under the same load at the same time, so time related differences I did not account for could not cause differences between the tests. To monitor the results I’ve used Micrometer to provide an endpoint that enables Prometheus to read JVM metrics.
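To give an idea of what Prometheus reads from such an endpoint, here is a minimal sketch that renders a few JVM gauges in the Prometheus text format using only the standard `java.lang.management` API. It is not the Micrometer code used in the test application. The class name `JvmMetricsSketch` is hypothetical, and the metric names are only modeled on Micrometer-style naming, so they may differ from what your application exposes.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryMXBean;
import java.lang.management.ThreadMXBean;

/** Hypothetical helper: renders a few JVM gauges in the Prometheus text format. */
final class JvmMetricsSketch {

    /** Reads current values from the platform MXBeans and formats one sample per line. */
    static String scrape() {
        MemoryMXBean memory = ManagementFactory.getMemoryMXBean();
        ThreadMXBean threads = ManagementFactory.getThreadMXBean();
        long heapUsed = memory.getHeapMemoryUsage().getUsed();
        long nonHeapUsed = memory.getNonHeapMemoryUsage().getUsed();

        StringBuilder out = new StringBuilder();
        // Metric names are illustrative assumptions, not taken from the original setup.
        out.append("# TYPE jvm_memory_used_bytes gauge\n");
        out.append("jvm_memory_used_bytes{area=\"heap\"} ").append(heapUsed).append('\n');
        out.append("jvm_memory_used_bytes{area=\"nonheap\"} ").append(nonHeapUsed).append('\n');
        out.append("# TYPE jvm_threads_live_threads gauge\n");
        out.append("jvm_threads_live_threads ").append(threads.getThreadCount()).append('\n');
        return out.toString();
    }

    public static void main(String[] args) {
        // Prints what a single scrape of this JVM would return.
        System.out.print(scrape());
    }
}
```

Prometheus polls such an endpoint at a fixed interval, so every JVM in the test gets sampled on the same schedule.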

When I run a test against a JVM and run the same test scenario again tomorrow, my results will differ. How can you be sure that all measures are conducted under exactly the same circumstances? The differences can have various causes: different CPUs pick up the workload and those CPUs are also busy with other things, or I’m running different background processes inside my host or guest OS. Even when I first test a single JVM and test another single JVM right after, the results will not be comparable since you cannot rule out that something has changed. For example, I’m using Prometheus to gather measures. During the second run, the Prometheus database might be filled with more data. This might make adding new data slower, which could influence the performance measures of the second JVM. This example might be rather far fetched, but you can think of other reasons why measures taken at different times can differ. That’s why I chose to perform all measures simultaneously. My setup consisted of a docker-compose file which allowed me to easily start the same reactive Spring Boot application 4 times, each running on a different JVM.
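Measuring simultaneously only works if every JVM receives an equal share of the requests within the same time window. A round-robin balancer does that, and the sketch below shows the idea in a few lines. It is an illustration only: the post does not say which balancing algorithm haproxy was configured with, and the class name `RoundRobinSketch` and the backend names are hypothetical.

```java
import java.util.List;
import java.util.concurrent.atomic.AtomicLong;

/** Hypothetical sketch of round-robin selection over a fixed list of backends. */
final class RoundRobinSketch {
    private final List<String> backends;
    private final AtomicLong counter = new AtomicLong();

    RoundRobinSketch(List<String> backends) {
        if (backends.isEmpty()) {
            throw new IllegalArgumentException("at least one backend is required");
        }
        this.backends = List.copyOf(backends);
    }

    /** Returns the next backend in rotation; safe to call from multiple threads. */
    String next() {
        long n = counter.getAndIncrement();
        // floorMod keeps the index non-negative even if the counter ever overflows.
        return backends.get((int) Math.floorMod(n, (long) backends.size()));
    }

    public static void main(String[] args) {
        // Backend names are placeholders, not the ones from the original setup.
        RoundRobinSketch balancer =
                new RoundRobinSketch(List.of("jvm-a:8080", "jvm-b:8080", "jvm-c:8080", "jvm-d:8080"));
        for (int i = 0; i < 8; i++) {
            System.out.println("request " + i + " -> " + balancer.next());
        }
    }
}
```

With 4 backends, consecutive requests go to consecutive JVMs, so any disturbance on the host during the test hits all of them at roughly the same moment.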

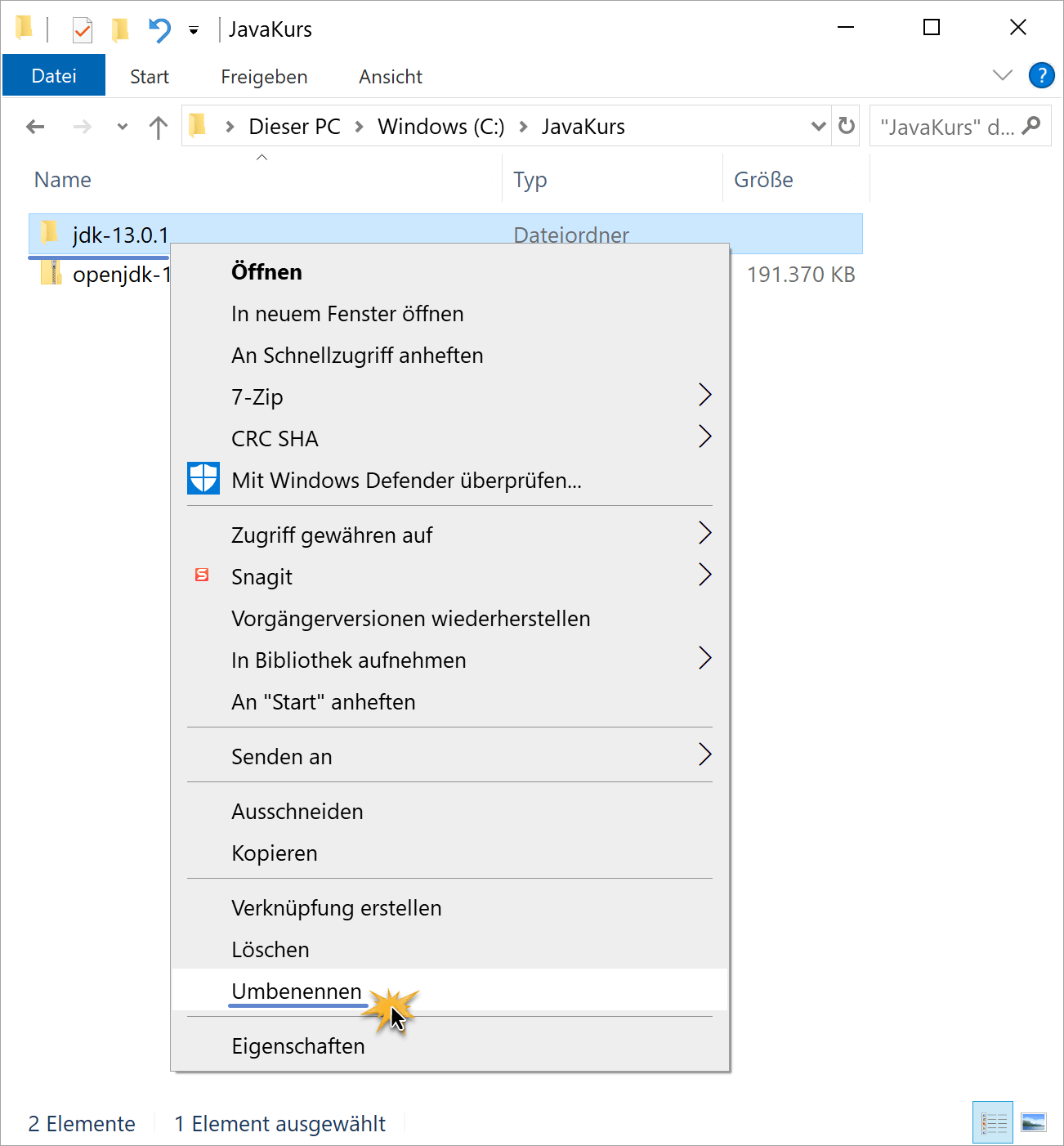


Assigning a specific CPU and memory to a process

Using docker-compose to configure a specific CPU for a process is challenging. The version 3 docker-compose format does not support assigning a specific CPU to a process. In addition, the version 3 format does not support assigning resource constraints at all when you use docker-compose to run it. This is because the people working on Docker appear to want to get rid of docker-compose (which is a separately maintained Python wrapper around Docker commands) in favor of docker stack deploy, which uses Docker Swarm and maybe Kubernetes in the future. You can imagine that assigning a specific CPU in a potentially multi host environment is not trivial. To test this, I therefore migrated my docker-compose file back to the version 2 format, which does allow assigning specific CPUs. I assigned the software that generates load and monitors the JVMs to specific CPUs not shared by the JVMs processing the load.
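A quick way to check whether such a constraint actually reaches the process is to ask the JVM what it sees. The sketch below uses only the standard `Runtime` API; the class name `ContainerResourcesSketch` is hypothetical. Whether the reported values reflect the container limits depends on the JDK version and its container support settings, so treat the output as a sanity check rather than proof.

```java
/** Hypothetical check: prints the CPU count and maximum heap size the JVM reports. */
final class ContainerResourcesSketch {

    /** Summarizes the resources visible to this JVM in a single line. */
    static String describe() {
        Runtime runtime = Runtime.getRuntime();
        int cpus = runtime.availableProcessors();
        // maxMemory() is the upper bound the JVM will try to use for the heap, in bytes.
        long maxHeapMiB = runtime.maxMemory() / (1024L * 1024L);
        return "availableProcessors=" + cpus + " maxHeapMiB=" + maxHeapMiB;
    }

    public static void main(String[] args) {
        System.out.println(describe());
    }
}
```

Running this inside a container pinned to a single CPU and comparing it with an unconstrained run shows whether the JVM under test picked up the restriction.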

There are many different choices for a JVM for your Java application. Which would be the best to use? This depends on various factors. Solid performance research however is difficult. In this blog I’ll describe a setup I created to perform tests on different JVMs at the same time. I also looked at the effect of resource isolation (assigning specific CPUs and memory to the process). My test application consisted of a reactive (non-blocking) Spring Boot REST application and I’ve used Prometheus to poll the JVMs and Grafana for visualization. Everything was running in Docker containers except SoapUI.

How can you be sure there is not something interfering with your measures? Of course you can’t be absolutely sure, but you can try to isolate the resources assigned to processes, for example by assigning a dedicated CPU and a fixed amount of memory. I also did several tests which put resource constraints on the load generating software, monitoring software and visualization software (assigning different CPUs and memory to those resources). Assigning specific resources to the processes (using docker-compose v2 cpuset and memory parameters) did not seem to greatly influence the measures of individual process load and response times. I also compared startup, under load and without load situations. The findings did not change under these different circumstances.
